In the past, we’ve written a few blog posts about specific parts of the new WebRTC work in Asterisk. With the release of Asterisk 16, though, it’s time to talk about the real-life impact of this work on the video experience over poorly performing networks. Before we start, let’s look at video itself.

Video communication has become a growing part of our lives over the past few years: we use it in business meetings, for video calls on our phones, and even from our browsers. Video, however, has a cost. It can use a lot of CPU, and it does not tolerate poor-quality networks. While we all like to think our networks are perfect, this is far from true. In reality, every network at one point or another encounters problems that can be disastrous to video. The most common symptom is freezing: the person you’re talking to suddenly stops moving and only recovers after a period of time. To improve the user experience, WebRTC uses various technologies to reduce the chance of this happening, and these technologies have now been implemented in Asterisk.

Picture Loss Indication (PLI) and Full Intra Request (FIR)

Excuse the picture: it’s me, frozen while grooving to music as I wrote this blog post.

When a video decoder decodes a stream of video, it relies on the previous packets in order to decode the current packet. This is a problem when packet loss occurs. Some codecs can recover over time, to a point, and keep showing the video stream; others can’t recover and stop immediately. The result is a frozen picture on your screen. Since no one likes a frozen picture, RTCP provides two mechanisms, PLI (RFC 4585) and FIR (RFC 5104), that allow a client to request a full frame (a key frame, which can be decoded without any previous data). The full frame lets video decoding resume, and the video you see starts moving again. Asterisk now supports and forwards these requests, so that video can resume even in the worst cases.

When packet loss does happen, you may see video freeze for a few seconds and then resume. This is much, much better than always-frozen video.
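To make the two requests concrete, here is a minimal Python sketch (not Asterisk’s own C code) that builds a PLI and a FIR using the packet layouts from RFC 4585 and RFC 5104. The function names are hypothetical, and a real endpoint would also encrypt these with SRTCP and usually send them inside a compound RTCP packet.

```python
import struct

RTCP_PT_PSFB = 206  # payload-specific feedback message type (RFC 4585)
FMT_PLI = 1         # Picture Loss Indication (RFC 4585, section 6.3.1)
FMT_FIR = 4         # Full Intra Request (RFC 5104, section 4.3.1)


def _psfb_header(fmt: int, length_words: int) -> bytes:
    # First byte: version 2 in the top two bits, padding bit 0, then the 5-bit FMT.
    # The length field is the packet size in 32-bit words minus one.
    first = (2 << 6) | (fmt & 0x1F)
    return struct.pack("!BBH", first, RTCP_PT_PSFB, length_words)


def build_pli(sender_ssrc: int, media_ssrc: int) -> bytes:
    # PLI carries no FCI: header + sender SSRC + media SSRC = 3 words.
    return _psfb_header(FMT_PLI, 2) + struct.pack("!II", sender_ssrc, media_ssrc)


def build_fir(sender_ssrc: int, target_ssrc: int, seq_nr: int) -> bytes:
    # For FIR the "SSRC of media source" field is 0; the target goes in the FCI
    # entry: SSRC, 8-bit command sequence number, 24 reserved bits.
    fci = struct.pack("!IB3x", target_ssrc, seq_nr & 0xFF)
    return _psfb_header(FMT_FIR, 4) + struct.pack("!II", sender_ssrc, 0) + fci


pli = build_pli(0x11111111, 0x22222222)
fir = build_fir(0x11111111, 0x22222222, seq_nr=1)
print(pli.hex())  # 81ce00021111111122222222
```

On the wire the two differ only in the FMT value and, for FIR, an FCI entry naming the target SSRC together with a command sequence number that the requester increments for each new request.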

Negative Acknowledgement (NACK)

PLI/FIR is a last-resort mechanism for keeping video flowing. The preferred, primary mechanism is NACK. NACK (RFC 4585) stands for “negative acknowledgement” and allows a receiver to indicate that it never received a packet. The sender can then resend just that packet, which consumes less bandwidth than sending a full frame. A single NACK can also request multiple packets rather than just one.
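Requesting several packets at once works because a Generic NACK (RFC 4585, section 6.2.1) pairs each lost packet ID (PID) with a 16-bit bitmask (BLP) covering the next 16 sequence numbers. The following Python sketch groups lost sequence numbers into those entries and builds the packet; the names are hypothetical and this is not taken from Asterisk.

```python
import struct
from typing import Iterable, List, Tuple

RTCP_PT_RTPFB = 205   # transport-layer feedback message type (RFC 4585)
FMT_GENERIC_NACK = 1  # Generic NACK (RFC 4585, section 6.2.1)


def nack_fci_entries(lost: Iterable[int]) -> List[Tuple[int, int]]:
    """Group lost sequence numbers into (PID, BLP) pairs.

    Bit 0 (least significant) of BLP marks PID+1 as lost, bit 15 marks PID+16.
    `lost` is assumed to be in ascending order, allowing for 16-bit wraparound.
    """
    entries: List[List[int]] = []
    for seq in lost:
        seq &= 0xFFFF
        if entries:
            pid = entries[-1][0]
            offset = (seq - pid) & 0xFFFF  # distance modulo 2^16
            if offset == 0:
                continue  # duplicate of the PID itself
            if offset <= 16:
                entries[-1][1] |= 1 << (offset - 1)
                continue
        entries.append([seq, 0])  # too far from the last PID: start a new entry
    return [(pid, blp) for pid, blp in entries]


def build_nack(sender_ssrc: int, media_ssrc: int, lost: Iterable[int]) -> bytes:
    fci = b"".join(struct.pack("!HH", pid, blp) for pid, blp in nack_fci_entries(lost))
    # Length in 32-bit words minus one: header + two SSRCs (3 words) + one word per entry.
    length_words = 2 + len(fci) // 4
    header = struct.pack("!BBH", (2 << 6) | FMT_GENERIC_NACK, RTCP_PT_RTPFB, length_words)
    return header + struct.pack("!II", sender_ssrc, media_ssrc) + fci


# Packets 100, 101 and 103 were lost: a single FCI entry covers all three.
print(nack_fci_entries([100, 101, 103]))  # [(100, 5)]
```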

Asterisk implements this using an RTP packet queue on both ingress and egress of video traffic.

Ingress re-ordering

Placing a queue on incoming RTP video traffic ensures that packets are ordered by sequence number before they reach the Asterisk core. This is a great benefit for Asterisk applications, since they don’t need to be cognizant of out-of-order packets. While this is currently only done for video traffic, it could be extended to other types (such as audio).
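Ordering by sequence number has one subtlety: RTP sequence numbers are 16 bits and wrap from 65535 back to 0, so comparisons have to be done modulo 2^16. Below is a minimal Python sketch of such a re-ordering buffer. The class and method names are hypothetical, it assumes the first packet received sets the starting point, and it is not Asterisk’s implementation.

```python
from typing import Dict, List, Optional

SEQ_MOD = 1 << 16  # RTP sequence numbers are 16 bits and wrap around


def seq_distance(a: int, b: int) -> int:
    """Signed distance from a to b modulo 2^16, in the range [-32768, 32767]."""
    d = (b - a) % SEQ_MOD
    return d - SEQ_MOD if d >= SEQ_MOD // 2 else d


class ReorderBuffer:
    """Release packets strictly in sequence-number order (hypothetical sketch)."""

    def __init__(self) -> None:
        self.expected: Optional[int] = None  # next sequence number to hand on
        self.pending: Dict[int, object] = {}

    def push(self, seq: int, payload: object) -> List[object]:
        if self.expected is None:
            self.expected = seq  # assumption: the first packet seen starts the stream
        if seq_distance(self.expected, seq) < 0:
            return []  # already released, so this is a late duplicate: drop it
        self.pending[seq] = payload
        released = []
        while self.expected in self.pending:
            released.append(self.pending.pop(self.expected))
            self.expected = (self.expected + 1) % SEQ_MOD
        return released


buf = ReorderBuffer()
print(buf.push(65534, "a"))  # ['a']
print(buf.push(0, "c"))      # [] because 65535 is still missing
print(buf.push(65535, "b"))  # ['b', 'c']
```

On its own this buffer would wait forever for a packet that never arrives; the loss detection described next is what bounds that wait.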

Ingress loss detection

The queue also lets Asterisk see gaps in sequence numbers as packets arrive. Because a gap may only mean that packets are out of order rather than lost, Asterisk does not request re-transmission immediately; it waits until a certain number of packets have been placed in the queue for later de-queueing. Once this high water mark is reached, Asterisk requests re-transmission of the missing packets. If the re-transmitted packets arrive, Asterisk empties the queue as far as it can, until it encounters another missing packet. If they never arrive, Asterisk empties the queue once it reaches a maximum limit and starts again.
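The high water mark and maximum limit can be sketched in Python as follows. The class is hypothetical and its thresholds (`high_water`, `max_size`) are made-up illustrative defaults rather than Asterisk’s values; it only shows the shape of the logic: release packets in order, NACK the gaps once enough packets are waiting, and flush past the gap if the re-transmissions never come.

```python
from typing import Dict, List, Set, Tuple

SEQ_MOD = 1 << 16  # RTP sequence numbers wrap at 2^16


class LossDetectingQueue:
    """Hypothetical sketch of ingress loss detection with a high water mark.

    push() returns (packets released in order, sequence numbers to NACK).
    """

    def __init__(self, first_seq: int, high_water: int = 10, max_size: int = 50) -> None:
        self.expected = first_seq
        self.high_water = high_water  # illustrative, not Asterisk's value
        self.max_size = max_size      # illustrative, not Asterisk's value
        self.pending: Dict[int, bytes] = {}
        self.requested: Set[int] = set()  # gaps already NACKed, so each is asked for once

    def _offset(self, seq: int) -> int:
        return (seq - self.expected) % SEQ_MOD  # position relative to the next expected packet

    def _drain(self) -> List[bytes]:
        out = []
        while self.expected in self.pending:
            out.append(self.pending.pop(self.expected))
            self.requested.discard(self.expected)
            self.expected = (self.expected + 1) % SEQ_MOD
        return out

    def _new_gaps(self) -> List[int]:
        newest = max(self.pending, key=self._offset)
        gaps = [(self.expected + i) % SEQ_MOD for i in range(self._offset(newest))]
        gaps = [s for s in gaps if s not in self.pending and s not in self.requested]
        self.requested.update(gaps)
        return gaps

    def push(self, seq: int, payload: bytes) -> Tuple[List[bytes], List[int]]:
        if self._offset(seq) >= SEQ_MOD // 2:
            return [], []  # older than what was already released: drop it
        self.pending[seq] = payload
        released = self._drain()
        if len(self.pending) >= self.max_size:
            # The re-transmissions never came: skip the gaps, flush everything, start again.
            ordered = sorted(self.pending, key=self._offset)
            released += [self.pending[s] for s in ordered]
            self.expected = (ordered[-1] + 1) % SEQ_MOD
            self.pending.clear()
            self.requested.clear()
            return released, []
        if len(self.pending) >= self.high_water:
            return released, self._new_gaps()  # high water mark reached: request the gaps
        return released, []


q = LossDetectingQueue(first_seq=0, high_water=3, max_size=5)
for seq in (0, 2, 3, 4):
    # Packet 1 is missing; pushing 4 fills the queue to the high water mark and NACKs 1.
    print(seq, q.push(seq, b"pkt%d" % seq))
```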

Egress re-transmission

A queue on outgoing traffic allows Asterisk to accept re-transmission requests from receivers. Asterisk stores a fixed number of recently sent RTP packets, indexed by sequence number, and resends them as requests arrive. As video flows normally, new packets are constantly added to this queue and old ones removed.
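Here is a sketch of the egress side in Python, built around a hypothetical `RetransmissionStore` with an illustrative capacity (not Asterisk’s actual queue length). It keeps the most recent packets keyed by sequence number and answers a Generic NACK entry by expanding its PID/BLP pair (RFC 4585) into the sequence numbers it names.

```python
from collections import OrderedDict
from typing import List


def expand_nack(pid: int, blp: int) -> List[int]:
    """Turn one Generic NACK entry (RFC 4585) into the sequence numbers it names."""
    lost = [pid]
    for bit in range(16):
        if blp & (1 << bit):
            lost.append((pid + bit + 1) & 0xFFFF)  # bit 0 means PID+1
    return lost


class RetransmissionStore:
    """Hypothetical sketch: keep the last `capacity` sent RTP packets by sequence number."""

    def __init__(self, capacity: int = 100) -> None:
        self.capacity = capacity
        self.packets: "OrderedDict[int, bytes]" = OrderedDict()

    def sent(self, seq: int, packet: bytes) -> None:
        self.packets[seq] = packet
        self.packets.move_to_end(seq)  # newest at the end, even if seq wrapped around
        while len(self.packets) > self.capacity:
            self.packets.popitem(last=False)  # evict the oldest packet

    def handle_nack(self, pid: int, blp: int) -> List[bytes]:
        """Packets to resend; ones that have already been evicted are skipped."""
        return [self.packets[s] for s in expand_nack(pid, blp) if s in self.packets]


store = RetransmissionStore(capacity=3)
for seq in range(5):
    store.sent(seq, bytes([seq]))
print(list(store.packets))           # [2, 3, 4]
print(store.handle_nack(1, 0b111))   # [b'\x02', b'\x03', b'\x04'] since 1 was evicted
```

The capacity is a trade-off: a larger store can answer NACKs for older packets at the cost of memory, while a packet requested after it has been evicted can only be recovered by falling back to PLI/FIR.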

The nice thing about NACK is that when it’s working, you never see the problems it’s covering up, which is why it is the preferred mechanism. If you use the “webrtc” option of PJSIP, NACK is automatically enabled.

Receiver Estimated Maximum Bitrate (REMB)

Ever wonder what 30 kbps VP8 video looks like? Well, now you know! That’s the bitrate being used in the picture above.

While packet loss and out-of-order packets both hurt the video experience, an oft-overlooked factor is bandwidth: not everyone in the world has a 1Gbps Internet connection. REMB is one of the oldest ways in WebRTC to deal with this cruel fact. It allows a receiver to estimate how much bandwidth is available between it and the sender, and to report that estimate back. The sender can then adjust its encoding bitrate to fit within the available bandwidth as best it can.
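REMB is defined in an IETF draft (draft-alvestrand-rmcat-remb) rather than an RFC. It carries the estimate as an 18-bit mantissa and a 6-bit exponent, so the bitrate is mantissa * 2^exp bits per second. This Python sketch of encoding and packing it is illustrative only; the function names are hypothetical and this is not Asterisk code.

```python
import struct
from typing import List, Tuple

MAX_MANTISSA = (1 << 18) - 1  # the mantissa field is 18 bits wide
MAX_EXP = (1 << 6) - 1        # the exponent field is 6 bits wide


def encode_remb_bitrate(bps: int) -> Tuple[int, int]:
    """Split a bitrate into (exponent, mantissa); mantissa << exponent never exceeds bps."""
    if bps < 0:
        raise ValueError("bitrate must be non-negative")
    exp = 0
    while (bps >> exp) > MAX_MANTISSA:
        exp += 1
    if exp > MAX_EXP:
        raise ValueError("bitrate too large for REMB")
    return exp, bps >> exp


def decode_remb_bitrate(exp: int, mantissa: int) -> int:
    return mantissa << exp


def build_remb(sender_ssrc: int, bps: int, media_ssrcs: List[int]) -> bytes:
    exp, mantissa = encode_remb_bitrate(bps)
    n = len(media_ssrcs)
    # 5 fixed words plus one per SSRC; the length field is words minus one.
    header = struct.pack("!BBH", (2 << 6) | 15, 206, 4 + n)  # FMT=15, PT=206 (PSFB)
    body = struct.pack("!II4s", sender_ssrc, 0, b"REMB")  # media source SSRC is 0
    body += struct.pack("!I", (n << 24) | (exp << 18) | mantissa)
    body += b"".join(struct.pack("!I", ssrc) for ssrc in media_ssrcs)
    return header + body


print(encode_remb_bitrate(2_000_000))  # (3, 250000)
print(encode_remb_bitrate(30_000))     # (0, 30000)
```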

Asterisk now supports REMB in two ways, depending on the call:

For a simple call, where you are just talking to one other person, Asterisk forwards the REMB information from one side to the other. If the receiver says it has 2Mbps of available bandwidth, Asterisk tells the sender that it has 2Mbps of available bandwidth. This improves call quality and, if available bandwidth drops, should keep the video going as best it can.

A conference bridge needs something different, because a video stream may have multiple receivers rather than just one. In this case, Asterisk combines all of the REMB reports into a single one according to a configurable behavior (lowest, highest, or average). This combined report is sent to the source of the video, which adjusts its encoding bitrate accordingly.
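Combining the reports is simple in principle. This hypothetical Python helper applies the three `remb_behavior` values from the ConfBridge configuration to the latest estimate from each receiver of one video source. It is a sketch, not Asterisk’s code; in particular, rounding the average down to a whole bit per second is our assumption.

```python
from typing import Dict


def combine_remb(estimates: Dict[str, int], behavior: str = "average") -> int:
    """Combine per-receiver REMB estimates (bps) into one value for the video source."""
    if not estimates:
        raise ValueError("no REMB reports received yet")
    values = list(estimates.values())
    if behavior == "lowest":
        return min(values)  # fits the receiver with the worst connection
    if behavior == "highest":
        return max(values)  # favours the receiver with the best connection
    if behavior == "average":
        return sum(values) // len(values)  # assumption: integer average
    raise ValueError(f"unknown remb_behavior: {behavior}")


# Latest estimate from each participant watching one sender's video.
latest = {"alice": 2_000_000, "bob": 500_000, "carol": 800_000}
print(combine_remb(latest, "lowest"))  # 500000
print(combine_remb(latest))            # 1100000
```

The choice is a trade-off: "lowest" keeps the stream within the weakest receiver’s bandwidth at the cost of quality for everyone else, "highest" does the reverse, and "average" sits in between.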

Support for this in the conference bridge must be enabled explicitly in the ConfBridge bridge profile configuration (confbridge.conf):

```
;remb_send_interval=1000 ; Interval (in milliseconds) at which a combined REMB frame will be sent to sources of video.
                         ; A REMB frame contains receiver estimated maximum bitrate information. By creating a combined
                         ; frame and sending it to the sources of video the sender can be influenced on what bitrate
                         ; they choose allowing a better experience for the receivers. This defaults to 0, or disabled.
;remb_behavior=average   ; How the combined REMB report for an SFU video bridge is constructed. If set to "average" then
                         ; the estimated maximum bitrate of each receiver is used to construct an average bitrate. If
                         ; set to "lowest" the lowest maximum bitrate is forwarded to the sender. If set to "highest"
                         ; the highest maximum bitrate is forwarded to the sender. This defaults to "average".
```

When REMB is in use, ideally all you see is the quality of other people’s video improving until it reaches a stable point. If your bandwidth is limited, you may see the video quality change as people join or leave; if your bandwidth varies, it may change throughout a call. Either way, REMB works to keep the video within the available bandwidth.

Put ‘Em Together!

Together, these technologies help keep video flowing even in the worst conditions, giving a better user experience while, ideally, remaining invisible. If you are using video on Asterisk, you already get some of these benefits; if you are using WebRTC with the “webrtc” option in PJSIP, you get the rest!

CPU Usage

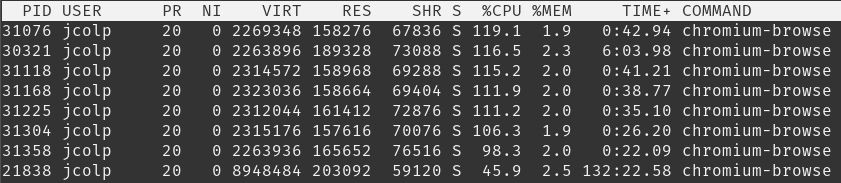

What do you get when you have 7 browser tabs open, all joined to the same video conference? A lot of CPU usage and a warmer office. Thankfully, this was not on my laptop, or it could have melted.

One area that Asterisk can’t outright solve is the CPU consumption involved with video. It is important to be cognizant of how many participants there are and of the capabilities of the receiving devices. It may not be reasonable to expect a first-generation Chromebook to decode 4 streams of VP8 video while encoding its own video. The device may lower its own encoding quality to reduce the strain and keep up. This works up to a point, but beyond it the client simply can’t handle the load. So, proceed with caution. 🙂