In the previous post, we:

- Picked out a bug to fix, ASTERISK-25179

- Signed up for an Asterisk account, signed a CLA, and created our profile in Gerrit

- Cloned Asterisk from its Git repo, installed its dependencies, built and installed it

- Ran the Asterisk unit tests

After doing all that, we concluded that we’d be better off writing a functional test in the Asterisk Test Suite that covers our noxious little CDR bug, rather than attempting to write a C unit test. For that, we need to start by obtaining the Asterisk Test Suite.

Step 4: Get the Test Suite

Let’s get the Asterisk Test Suite downloaded. Like Asterisk, it also is hosted in Gerrit:

$ git clone ssh://gerrit.asterisk.org:29418/testsuite testsuite

Much like Asterisk, the Test Suite has a lot of dependencies. Rather than going through the installation procedure for all of them, we’ll focus on installing the “core” dependencies, along with the ones we’ll need to run the CDR functional tests.

Most of the Asterisk Test Suite is written in Python using the Twisted framework (sometimes hidden quite effectively, other times, not so much). I’ll assume that you at least have Python and pip installed – if not, get those first!

Install the basic Python libraries we’ll need:

testsuite $ sudo pip install pyaml twisted lxml

Next, install starpy, an AMI/AGI client library. To stay on the latest version, we’ll install it from GitHub; the Test Suite provides a Makefile for that:

testsuite $ cd addons
testsuite/addons $ make update
testsuite/addons $ cd starpy
testsuite/addons/starpy $ sudo python setup.py install

Finally, we’ll want to install SIPp, a SIP test tool used extensively in the Test Suite. While SIPp is available as a package for many distributions, we’ll grab a recent release from its GitHub repo instead, as some tests in the Test Suite make liberal use of newer SIPp features.

$ wget https://github.com/SIPp/sipp/archive/v3.4.1.tar.gz
$ tar -zxvf v3.4.1.tar.gz
$ cd sipp-3.4.1
sipp-3.4.1 $ ./configure --with-pcap --with-openssl
sipp-3.4.1 $ sudo make install

If we’ve done it correctly, we should be able to see the SIPp version by running the following:

$ sipp -v
SIPp v3.4.1-TLS-PCAP-RTPSTREAM
...

With these dependencies, we should be able to run the CDR tests.

Note: This is definitely not enough to run all of the tests in the Test Suite, as many of the tests use other third-party libraries and tools. See the instructions on the Asterisk wiki for more information on installing the other dependencies for the Test Suite.
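Before moving on, it can be handy to confirm that the Python side of these installs took. The following is a minimal sketch, not something shipped with the Test Suite: missing_modules is a hypothetical helper, and it assumes the packages above expose the modules yaml (from PyYAML, which pyaml depends on), twisted, lxml, and starpy.

```python
import importlib.util

# Module names we expect after the installs above. Assumption: the pyaml
# package pulls in PyYAML, which provides the "yaml" module.
CORE_MODULES = ["yaml", "twisted", "lxml", "starpy"]


def missing_modules(names=CORE_MODULES):
    """Return the names from `names` that cannot be found on sys.path.

    find_spec() only locates a top-level module; it does not import it.
    """
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing Python modules: " + ", ".join(missing))
    else:
        print("All core Python modules found")
```

Anything it reports as missing is worth installing before running the CDR tests.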

Step 5: Run some tests

Now that we’ve got the Test Suite installed, we can see if it has any tests that should have caught this bug. The Test Suite is invoked via the runtests.py script. However, we don’t want to just kick off a run of the full Test Suite. To start, the Test Suite covers a lot of functionality that – with respect to our CDR bug – we don’t care about. It also doesn’t help that a full run of the Test Suite takes over four (!) hours. So we need to be a bit intelligent about what we run.

We can start out by listing all of the tests in the Test Suite by invoking runtests.py with the ‘-l’ option:

testsuite $ ./runtests.py -l

...

847) tests/udptl_v6

--> Summary: Test T.38 FAX transmission over SIP

--> Minimum Version: 1.8.0.0 (True)

--> Maximum Version: (True)

--> Tags: ['SIP', 'fax']

--> Dependency: twisted -- Met: True

--> Dependency: starpy -- Met: True

--> Dependency: fax -- Met: True

--> Dependency: ipv6 -- Met: True

--> Dependency: chan_sip -- Met: True

Unfortunately, at least for our purposes, that spits out a detailed listing of every test in the Test Suite, along with its dependencies. While that may be helpful if we were trying to figure out why a test didn’t run, it doesn’t help us if we only want to find and/or run the CDR-related tests.
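If you did want to mine that listing, a short script can do it. This sketch assumes the output format shown above: a line like ‘847) tests/udptl_v6’ starts each test, and its tags appear on a ‘--> Tags:’ line as a Python-style list. find_tagged_tests and SAMPLE are hypothetical names, not part of runtests.py.

```python
import ast
import re

# "847) tests/udptl_v6" starts a new test entry in the listing.
TEST_LINE = re.compile(r"^\s*\d+\)\s+(\S+)")
# "--> Tags: ['SIP', 'fax']" holds that test's tags as a Python list literal.
TAGS_LINE = re.compile(r"^\s*-->\s*Tags:\s*(\[.*\])\s*$")


def find_tagged_tests(listing, tag):
    """Return the test paths in a `runtests.py -l` listing tagged with `tag`."""
    matches = []
    current = None
    for line in listing.splitlines():
        test = TEST_LINE.match(line)
        if test:
            current = test.group(1)
            continue
        tags = TAGS_LINE.match(line)
        if tags and current is not None and tag in ast.literal_eval(tags.group(1)):
            matches.append(current)
    return matches


SAMPLE = """847) tests/udptl_v6
--> Summary: Test T.38 FAX transmission over SIP
--> Tags: ['SIP', 'fax']
--> Dependency: twisted -- Met: True
"""

print(find_tagged_tests(SAMPLE, "fax"))  # ['tests/udptl_v6']
```

In practice you would feed it the captured output of ./runtests.py -l instead of SAMPLE.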

Another option is to list tests that are tagged as covering some aspect of CDR functionality. We can look at the available tags in the Test Suite by invoking the ‘-L’ option:

testsuite $ ./runtests.py -L

...

Asterisk Version: GIT-13-5a75caa

Available test tags:

accountcode ACL agents

AGI AMI answered_elsewhere

apps ARI bridge

ccss CDR CEL

chan_local chanspy confbridge

configuration connected_line dial

dialplan dialplan_lua directory

disa DTMF fax

fax_gateway fax_passthrough features

gosub GoSub hangup

hangupcause HTTP_SERVER iax2

ICE incomplete macro

manager mixmonitor mwi_external

page parking pbx

pickup PJSIP pjsip

playback predial queue

queues realtime redirecting

rls say SIP

sip_cause SIP_session_timers statsd

subroutine transfer voicemail

That’s a bit better. Now, if we really wanted to, we could invoke runtests.py with ‘-g CDR’ and run only those tests that affect CDRs. Let’s try that:

testsuite $ sudo ./runtests.py -g CDR
...
--> Running test 'tests/cdr/batch_cdrs' ...
Making sure Asterisk isn't running ...
Making sure SIPp isn't running...
Running ['./lib/python/asterisk/test_runner.py', 'tests/cdr/batch_cdrs'] ...
[Jan 01 12:28:31] WARNING[4954]: astcsv:196 match: More than one CSV permutation results in success
Test ['./lib/python/asterisk/test_runner.py', 'tests/cdr/batch_cdrs', 'GIT-13-5a75caa'] passed
--> Running test 'tests/cdr/cdr_bridge_multi' ...
Making sure Asterisk isn't running ...
Making sure SIPp isn't running...
Running ['./lib/python/asterisk/test_runner.py', 'tests/cdr/cdr_bridge_multi'] ...
[Jan 01 12:28:36] WARNING[5113]: astcsv:196 match: More than one CSV permutation results in success
Test ['./lib/python/asterisk/test_runner.py', 'tests/cdr/cdr_bridge_multi', 'GIT-13-5a75caa'] passed
...

When it’s done, we get … a really giant XML dump. Drat. So why did that happen?

The Test Suite is most often run from a CI system, such as Jenkins. It’s often useful to see all of the skipped tests in such systems, as it helps developers find build agents that are missing dependencies. Unfortunately, in this case, we purposefully skipped most of the tests in the Test Suite, which the output report dutifully tells us. Nuts.

Note: adding an option that removes skipped tests from the XML output would be a nice addition to the Test Suite.
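Until something like that exists, a few lines of Python can filter the skipped tests out for us. This is a sketch that assumes the report is the JUnit-style XML shown in this post, where a skipped test has a <skipped> child and a failed one has a <failure> (or <error>) child; summarize_tree and summarize_report are hypothetical helpers.

```python
import xml.etree.ElementTree as ET


def summarize_tree(root):
    """Return (ran, failed) test names from a parsed report, ignoring skips."""
    ran, failed = [], []
    for case in root.iter("testcase"):
        if case.find("skipped") is not None:
            continue  # skipped: missing dependency, or excluded by -g
        name = case.get("name")
        ran.append(name)
        if case.find("failure") is not None or case.find("error") is not None:
            failed.append(name)
    return ran, failed


def summarize_report(path="asterisk-test-suite-report.xml"):
    """Parse the report file written by runtests.py and summarize it."""
    return summarize_tree(ET.parse(path).getroot())
```

Calling summarize_report() from the testsuite directory after a run would return the names of the tests that actually ran, plus the subset that failed.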

We can still look at the top of the XML report to see if any of the tests failed:

testsuite $ head asterisk-test-suite-report.xml
<?xml version="1.0" encoding="utf-8"?>
<testsuites>
<testsuite errors="0" failures="0" name="AsteriskTestSuite" skipped="800" tests="47" time="322.48" timestamp="2016-01-01T12:26:11 CST">
<testcase name="tests/agi/exit_status" time="0.00">
<skipped>Failed dependency</skipped>
</testcase>
<testcase name="tests/apps/agents/agent_acknowledge/agent_acknowledge_error" time="0.00">
<skipped>Failed dependency</skipped>
</testcase>
<testcase name="tests/apps/agents/agent_acknowledge/nominal" time="0.00">

Yay! None of them did. And we apparently ran 47 tests. But did any of them cover our CDR dialplan functionality?

If we grep for ‘cdr’ in the report, we’ll get the following:

testsuite $ grep cdr asterisk-test-suite-report.xml

<testcase name="tests/cdr/app_dial_G_flag" time="0.00">

<testcase name="tests/cdr/app_queue" time="0.00">

<testcase name="tests/cdr/batch_cdrs" time="8.32"/>

<testcase name="tests/cdr/cdr_bridge_multi" time="4.73"/>

<testcase name="tests/cdr/cdr_dial_subroutines" time="6.88"/>

<testcase name="tests/cdr/cdr_manipulation/cdr_fork_end_time" time="11.33"/>

<testcase name="tests/cdr/cdr_manipulation/cdr_prop_disable" time="5.06"/>

<testcase name="tests/cdr/cdr_manipulation/console_fork_after_busy_forward" time="6.40"/>

<testcase name="tests/cdr/cdr_manipulation/console_fork_before_dial" time="7.80"/>

<testcase name="tests/cdr/cdr_manipulation/nocdr" time="4.22"/>

<testcase name="tests/cdr/cdr_originate_sip_congestion_log" time="3.88"/>

<testcase name="tests/cdr/cdr_properties/blind-transfer-accountcode" time="0.00">

<testcase name="tests/cdr/cdr_properties/cdr_accountcode" time="5.41"/>

<testcase name="tests/cdr/cdr_properties/cdr_userfield" time="5.58"/>

<testcase name="tests/cdr/cdr_unanswered_yes" time="4.96"/>

<testcase name="tests/cdr/console_dial_sip_answer" time="4.24"/>

<testcase name="tests/cdr/console_dial_sip_busy" time="3.78"/>

<testcase name="tests/cdr/console_dial_sip_congestion" time="3.82"/>

<testcase name="tests/cdr/console_dial_sip_transfer" time="4.28"/>

<testcase name="tests/cdr/originate-cdr-disposition" time="10.12"/>

<testcase name="tests/cdr/sqlite3" time="7.99"/>

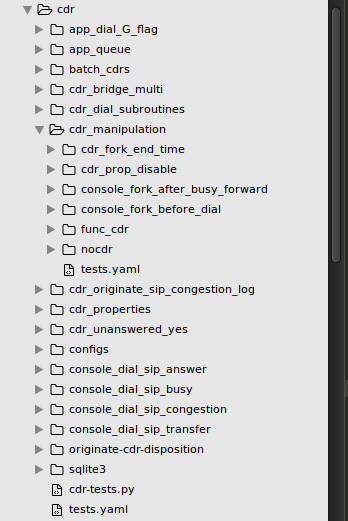

That’s better. Now we can see the tests that live in the tests/cdr subdirectory, or that explicitly call out a piece of CDR functionality in their name. You’ll note that this is far fewer than the full 47 tests that we ran; that’s because many tests, such as those that cover basic Dial functionality, also produce CDRs that are verified. Since those tests don’t use the CDR dialplan function, however, we don’t need to worry about them as much.

As an aside, since we now know that we have tests in the tests/cdr subdirectory, we could explicitly run just those tests by using the ‘-t’/‘--test’ option:

testsuite $ sudo ./runtests.py -t tests/cdr

But since we’ve already got our output, we’ll leave that for a subsequent run.

Looking at the CDR-specific tests, it doesn’t look like we’ve got a test that covers the CDR dialplan function. We have some that cover ForkCDR, NoCDR, and CDR_PROP in the tests/cdr/cdr_manipulation directory, but none that clearly jumps out and says “I’m supposed to be covering CDR itself!” At least that explains why this bug slipped through: we don’t have a test for it anywhere!

Which means we get to write one next!

A slight aside on how we ended up in this state:

When Asterisk 12 was being developed, we knew that we would have to rewrite the vast majority of CDR functionality in Asterisk. This was due to the legacy CDR code being sprinkled throughout the codebase, most notably in the previous version’s bridging code. Since Asterisk 12 was providing a Bridging Framework, we had two options:

- Re-insert the CDR logic into the new Bridging Framework. This was unpalatable, as CDRs have long been considered an unmaintainable mess in Asterisk, largely due to the effect of masquerades in the bridging code. Pushing CDRs back into the now slightly cleaner Bridging Framework felt like a bad idea.

- Re-write most of the CDR logic in cdr.c, responding to state changes communicated over the new Stasis message bus. This felt like a good idea, but like all good ideas, had some downsides. Most notable of these was the loss of application specific ‘tweaks’ that previously existed for CDRs.

While we clearly went with Option #2, in order to accommodate as many of these tweaks and esoteric behaviours as possible, a bevy of CDR unit tests were written that covered the old behaviour, and were then updated for the new CDR core engine. One of these unit tests, test_cdr_fields, covers the API call that the CDR dialplan function uses. In fact, the CDR dialplan function is really just a facade over this API call. At the time, the author* believed that this would be sufficient, and that a specific functional test for the CDR dialplan function was superfluous. Clearly, that was not the case, as the unit test did not prevent this bug. There are a number of lessons in there. If nothing else, we should all take away that we should always write tests for everything, particularly when performing a major refactoring.

* Hint: It was me.

Step 6: Reproduce the bug by writing a test that fails

So now that we know that we do not have an existing test that covers the CDR dialplan function, let’s write one!

Before we start, I’m going to go ahead and make a branch in the Test Suite for this new test. Since the test is going to be covering ASTERISK-25179, I’ll call the branch that:

testsuite $ git checkout -b ASTERISK-25179 -t origin/master

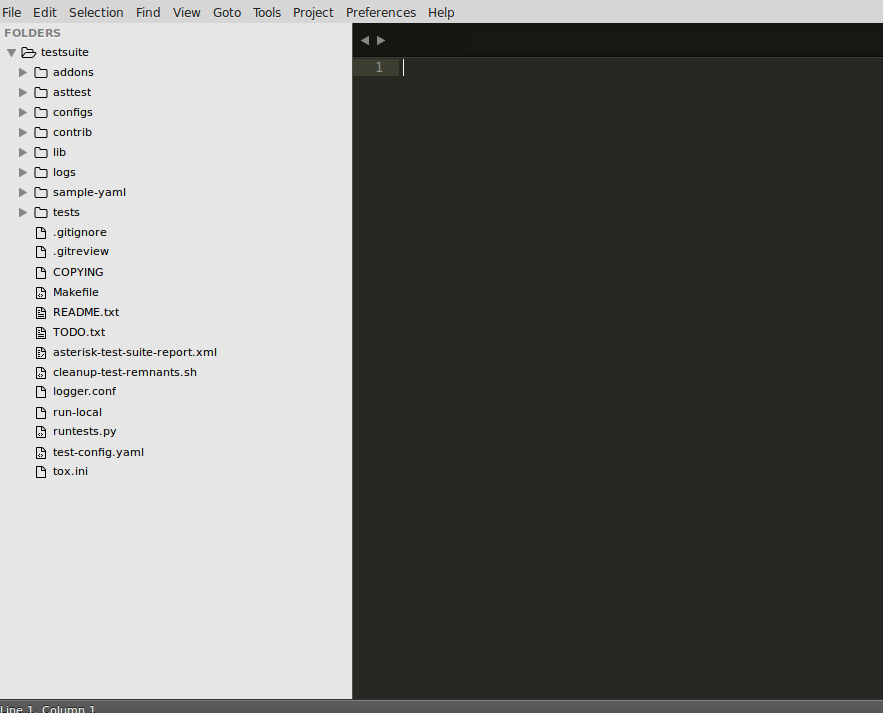

Now, fire up your favourite code editor (I’ll be using Sublime Text), and let’s look at the Test Suite:

There’s a lot going on here. For now, we’ll just highlight the most interesting items in the root directory:

- runtests.py : As we found out in the previous section, this is the Python script that actually finds, runs, and manages the tests.

- test-config.yaml : The Test Suite uses YAML for configuration. This configuration file provides global settings for all of the tests that run.

- lib : This contains useful libraries that tests can build upon. The vast majority of the Test Suite makes use of some functionality in here, in some way or another.

- tests : Where all the tests live. When runtests.py is invoked, it looks for this folder, and then for the tests.yaml configuration file that lives in it. That file dictates the tests and test directories runtests.py should look for. The script adds the tests in tests.yaml to its list of allowed tests, then follows the test directories, looking for additional tests.yaml files. When it runs out of tests.yaml files, it returns the full list of all tests that it found.
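The discovery process just described amounts to a recursive walk. Here is a simplified sketch of it, working on already-parsed YAML so it doesn’t need PyYAML. The entry keys are assumptions: ‘test’ for a test and ‘dir’ for a subdirectory with its own tests.yaml. discover_tests and LAYOUT are hypothetical, LAYOUT is heavily abbreviated, and this sketch simply visits entries in file order.

```python
import posixpath


def discover_tests(tests_yaml, directory="tests"):
    """Walk parsed tests.yaml files and return every test path found.

    tests_yaml maps a directory to the parsed contents of its tests.yaml.
    Assumption: entries are {'test': name} for a test and {'dir': name}
    for a subdirectory holding another tests.yaml.
    """
    found = []
    for entry in tests_yaml.get(directory, {}).get("tests", []):
        if "test" in entry:
            found.append(posixpath.join(directory, entry["test"]))
        elif "dir" in entry:
            # Recurse into the subdirectory's own tests.yaml.
            found.extend(discover_tests(tests_yaml, posixpath.join(directory, entry["dir"])))
    return found


LAYOUT = {
    "tests": {"tests": [{"dir": "cdr"}]},
    "tests/cdr": {"tests": [{"test": "batch_cdrs"}, {"dir": "cdr_manipulation"}]},
    "tests/cdr/cdr_manipulation": {"tests": [{"test": "nocdr"}, {"test": "func_cdr"}]},
}

print(discover_tests(LAYOUT))
```

A directory that no tests.yaml points at is never visited, which is why a new test has to be registered before runtests.py can find it.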

So, if we want to add a new test, we first have to decide where we want it to live. If we expand out the tests directory, we can see we really have two options:

- tests/cdr, or more specifically, tests/cdr/cdr_manipulation. This is where the other tests that cover the CDR dialplan applications/functions live.

- tests/funcs, which contains various tests for different dialplan functions.

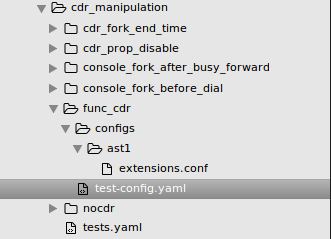

To me, option (1) sounds better and makes more sense. So let’s add a new folder, func_cdr, under cdr_manipulation:

In order for runtests.py to find our new test folder, we have to tell the tests.yaml in tests/cdr/cdr_manipulation that func_cdr is a valid test folder. Let’s open that file, and add our test to it:

# Enter tests here in the order they should be considered for execution:
tests:
    - test: 'console_fork_after_busy_forward'
    - test: 'console_fork_before_dial'
    - test: 'cdr_fork_end_time'
    - test: 'nocdr'
    - test: 'cdr_prop_disable'
    - test: 'func_cdr'

Now that runtests.py should be able to find our test, we need to add some basic items to it. At a minimum, that means providing a test-config.yaml that defines attributes of the test. However, it’s important to know that there are two ways runtests.py will invoke a test in the Test Suite. Depending on the path we choose, we’ll write a very different kind of test:

- The “classic” way: runtests.py will look for a file named ‘run-test’, with no file extension. That file will be launched in its own process, and can contain… anything. Most run-test files are Python, but some lingering Bash- and Lua-based tests remain. If this file exists, the test’s test-config.yaml mostly contains properties that document what the test does and the dependencies it requires.

- The “new” way: if runtests.py does not find a file named run-test, it will look to the test-config.yaml file to tell it what objects make up the test. The test-config.yaml file specifies a Python “test object”, which acts as an orchestrator for the test. There are a variety of test objects available in the Test Suite, and new ones can be added by intrepid developers. At heart, a test object is responsible for the orderly execution of Asterisk and for managing the APIs that a test uses to drive itself. In addition, the test-config.yaml file can specify “pluggable modules”, which are bits of Python code that attach to the test object and do interesting things in the test. This can be anything from spawning a call, to verifying AMI events, to checking CDR records.
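The choice between these two paths boils down to one file check. Here is a minimal sketch, assuming the behaviour described above; the test_runner.py command mirrors the ‘Running [...]’ lines that runtests.py printed earlier, and pick_runner is a hypothetical name, not the Test Suite’s own function.

```python
import os


def pick_runner(test_dir, isfile=os.path.isfile):
    """Return the command that would launch the test in test_dir (a sketch).

    isfile is injectable so the decision can be exercised without a real
    checkout of the Test Suite.
    """
    run_test = os.path.join(test_dir, "run-test")
    if isfile(run_test):
        # "Classic" test: run-test is executed in its own process.
        return [run_test]
    # "New" test: the generic runner builds the test from test-config.yaml.
    return ["./lib/python/asterisk/test_runner.py", test_dir]


print(pick_runner("tests/cdr/batch_cdrs", isfile=lambda path: False))
```

Because our new test will not have a run-test file, it takes the second branch.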

Since our test merely wants to spawn a call, generate a CDR, and verify some properties, we’re going to go with Option (2), as we have plenty of test objects and pluggable modules that will suffice for that purpose. If we had a very esoteric test we wanted to write that required a lot of scripting, we may opt for Option (1) – but even in that case, the pluggable module approach is generally preferred.

With that out of the way, let’s add our test-config.yaml, as well as a blank extensions.conf. The extensions.conf needs to be placed in a configs/ast1 subdirectory, as the Test Suite looks in that folder for Asterisk configuration files that override the system/Test Suite defaults.

First, like any good developer, we’ll start off by documenting what our test does. For that, we’ll define a testinfo section, where we’ll provide a summary and a description:

testinfo:
    summary: 'Test the CDR dialplan function'
    description: |
        'This test generates a CDR, and then uses the CDR
        dialplan function to verify attributes on the CDR.'

Okay, so this may not be the best description ever, but it suffices for now.

Next, we’ll add our test object. But which test object to use? While there are many options, my plan for this test is to spawn a Local channel, Answer it, Hang it up, then look at the CDR properties in the h extension. Luckily, there’s a test object that fits this mold pretty well: the SimpleTestCase test object. By and large, all this test object does is spawn a sequence of channels and – when all of them are hung up – ends the test. Sounds close enough for our purposes. To define the test object, we need a test-modules section:

test-modules:
    test-object:
        config-section: test-object-config
        typename: 'test_case.SimpleTestCase'

This section will tell the Test Suite to create an object of type test_case.SimpleTestCase, and feed it the parameters defined in the test-object-config section. Since that section doesn’t exist yet, we’d better add it:
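A typename such as ‘test_case.SimpleTestCase’ reads like ‘module.ClassName’. One plausible way to turn such a string into a class is importlib, sketched below; this is an assumption about the mechanism, not the Test Suite’s actual loader, and load_typename is a hypothetical helper. It is demonstrated with a standard library class so it runs anywhere.

```python
import importlib


def load_typename(typename):
    """Resolve a 'module.ClassName' string to the class it names."""
    module_name, _, class_name = typename.rpartition(".")
    if not module_name:
        raise ValueError("expected 'module.ClassName', got %r" % typename)
    module = importlib.import_module(module_name)
    return getattr(module, class_name)


# In the Test Suite the string would be 'test_case.SimpleTestCase', which
# presumably requires lib/python/asterisk to be importable.
print(load_typename("collections.OrderedDict").__name__)  # OrderedDict
```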

test-object-config:
    config-path: 'tests/cdr/configs/basic'
    expected_events: 0
    spawn-after-hangup: True
    test-iterations:
        -
            channel: 'Local/s@default'
            application: 'Echo'

This tells the SimpleTestCase object a few things.

- It tells the test object that it needs to install some additional configuration files from tests/cdr/configs/basic. If we look in there, we’ll find a basic cdr.conf that a number of CDR tests use. While we could have just defined a cdr.conf in our configs/ast1 directory, a common one has been provided for us, so we might as well use it.

- We’ve set expected_events to 0. This tells the SimpleTestCase test object not to look for UserEvents. In our case, we will later want to make use of a UserEvent outside of the test object, and we don’t want UserEvent-specific logic in the test object to interfere with that.

- We’ve set spawn-after-hangup to True. The SimpleTestCase will look for one of two things to determine that an “iteration” is done: either a UserEvent or a Hangup event. In our case, a Hangup event will work just fine, so we tell it to look for that.

- Finally, we’ve defined what a test iteration is. We only need a single iteration, so we’ve only specified one in the list. In that test iteration, we want the test to spawn a Local channel to extension s in context default, and attach the other end of the Local channel to the Echo application.

Before we can start writing the dialplan for our test, we need to add one more section to our test-config.yaml: the properties for the test. All of the Python-based tests (which ours is, even if we’re gluing things together with YAML) require starpy and twisted, so we’ll add those as Python dependencies. We’re also making use of func_cdr and app_echo, so we’ll add those as dependencies as well. Since current versions of Asterisk will fail this test (once we’re done with it, at any rate), we’ll set a minimum version of 13.8.0, which is the next unreleased version. Hopefully, our bug fix will be in for that release! Lastly, we’ll tag the test with CDR, so that ‘-g CDR’ picks it up.

properties:
    minversion: '13.8.0'
    dependencies:
        - python: 'twisted'
        - python: 'starpy'
        - asterisk: 'app_echo'
        - asterisk: 'func_cdr'
    tags:
        - CDR

With all that put together, we can now write our test’s dialplan. Based on our test configuration, we know that we’re going to spawn a Local channel into s@default. For this test, at a minimum, we want to show that the f option on the CDR function is broken for the duration/billsec properties. While we’re here, we should also check that we get expected values for some of the other attributes the CDR function can extract, and that a custom property set with the CDR function can be read back by it.

To cover all of that, we can write the following dialplan:

[default]

exten => s,1,NoOp()

same => n,Set(CALLERID(name)=Alice)

same => n,Set(CALLERID(num)=8005551234)

same => n,Set(CDR(foo)=bar)

same => n,Set(CDR(userfield)=test)

same => n,Wait(1)

same => n,Answer()

same => n,Wait(1)

same => n,Hangup()

exten => h,1,NoOp()

same => n,Log(NOTICE, ${CDR(lastdata)})

same => n,Log(NOTICE, ${CDR(disposition)})

same => n,Log(NOTICE, ${CDR(src)})

same => n,Log(NOTICE, ${CDR(start)})

same => n,Log(NOTICE, ${CDR(start, u)})

same => n,Log(NOTICE, ${CDR(dst)})

same => n,Log(NOTICE, ${CDR(answer)})

same => n,Log(NOTICE, ${CDR(answer, u)})

same => n,Log(NOTICE, ${CDR(dcontext)})

same => n,Log(NOTICE, ${CDR(end)})

same => n,Log(NOTICE, ${CDR(end, u)})

same => n,Log(NOTICE, ${CDR(uniqueid)})

same => n,Log(NOTICE, ${CDR(dstchannel)})

same => n,Log(NOTICE, ${CDR(duration)})

same => n,Log(NOTICE, ${CDR(duration, f)})

same => n,Log(NOTICE, ${CDR(userfield)})

same => n,Log(NOTICE, ${CDR(lastapp)})

same => n,Log(NOTICE, ${CDR(billsec)})

same => n,Log(NOTICE, ${CDR(billsec, f)})

same => n,Log(NOTICE, ${CDR(channel)})

same => n,Log(NOTICE, ${CDR(sequence)})

same => n,Log(NOTICE, ${CDR(foo)})

same => n,Set(CDR(foo)=not_bar)

same => n,Log(NOTICE, ${CDR(foo)})

As we can see, we’re not doing too much here. We first give our Local channel a Caller ID, as this will affect a few different values in the resulting CDR. We then set a few attributes on the underlying CDR using the CDR dialplan function. Waiting a second before Answering, and another second before Hanging up, gives us some delta between the values we expect for duration and billsec. In the h extension, we dump out all the values, and change the value of our custom property for good measure.

Let’s go ahead and run our test:

testsuite $ sudo ./runtests.py --test=tests/cdr/cdr_manipulation/func_cdr

Running tests for Asterisk GIT-13-5a75caa (run 1)...

Tests to run: 1, Maximum test inactivity time: -1 sec.

--> Running test 'tests/cdr/cdr_manipulation/func_cdr' ...

Making sure Asterisk isn't running ...

Making sure SIPp isn't running...

Running ['./lib/python/asterisk/test_runner.py', 'tests/cdr/cdr_manipulation/func_cdr'] ...

Test ['./lib/python/asterisk/test_runner.py', 'tests/cdr/cdr_manipulation/func_cdr', 'GIT-13-5a75caa'] failed

<?xml version="1.0" encoding="utf-8"?>

<testsuites>

<testsuite errors="0" failures="1" name="AsteriskTestSuite" tests="1" time="5.23" timestamp="2016-01-02T20:15:52 CST">

<testcase name="tests/cdr/cdr_manipulation/func_cdr" time="5.23">

<failure>Running ['./lib/python/asterisk/test_runner.py', 'tests/cdr/cdr_manipulation/func_cdr'] ...

</failure>

</testcase>

</testsuite>

Yay! We wrote a test that fails!

Unfortunately, it fails because we didn’t tell it how to pass. Right now, our test just executes some dialplan and returns; nothing tells it whether the dialplan succeeded. Our dialplan isn’t really testing anything, either: the CDR data could be absolutely terrible. In fact, if we look at the log file generated by the test, we’ll see that it is. Let’s go take a look.

The Test Suite will sandbox Asterisk on every run, including the log files that Asterisk generates. Open up /tmp/asterisk-testsuite/cdr/cdr_manipulation/func_cdr/run_1/ast1/var/log/asterisk/messages.txt in your favourite editor, and look for the dialplan execution in the h extension. If you scroll down far enough, you should see something like the following:

[Jan 2 20:15:57] VERBOSE[2289][C-00000000] pbx.c: Executing [h@default:16] Log("Local/s@default-00000000;2", "NOTICE, 2") in new stack

[Jan 2 20:15:57] NOTICE[2289][C-00000000] Ext. h: 2

[Jan 2 20:15:57] VERBOSE[2289][C-00000000] pbx.c: Executing [h@default:17] Log("Local/s@default-00000000;2", "NOTICE, 0.002000") in new stack

[Jan 2 20:15:57] NOTICE[2289][C-00000000] Ext. h: 0.002000

Oh look, we’re dumping out the duration and billsec. And with the f option, those values are way wrong. It’s a bug!
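A little arithmetic shows how far off that is. With Wait(1) before Answer() and Wait(1) before Hangup(), duration (end minus start) should be a bit over 2 seconds and billsec (end minus answer) a bit over 1 second. The sketch below uses round, hypothetical timestamps and assumes the six-decimal formatting seen in the log; cdr_times is an illustrative helper, not Asterisk code. Note that 0.002000 is exactly 2 divided by 1,000.

```python
def cdr_times(start, answer, end):
    """Return (duration, billsec) in seconds with six decimal places.

    duration covers the whole call; billsec only the answered part.
    """
    duration = end - start
    billsec = end - answer
    return "%.6f" % duration, "%.6f" % billsec


# Hypothetical timestamps for Wait(1), Answer(), Wait(1), Hangup().
print(cdr_times(start=100.0, answer=101.0, end=102.0))  # ('2.000000', '1.000000')
print("%.6f" % (2 / 1000))                              # 0.002000
```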

Reproducing the bug is a good first step. Now let’s make the test fail properly. To do that, we’re going to use a UserEvent from the dialplan to inform the test when something bad happens. The test will use a special pluggable module to validate the AMI events; in this case, not receiving a UserEvent will imply success.

First, let’s write a subroutine that validates our CDR attributes. We’ll make our subroutine take in four parameters: the attribute to test, the expected value, the operation we want to use to test the actual and expected value, and – optionally – an extra parameter to pass to the CDR function.

exten => validate_cdr_value,1,NoOp()

same => n,ExecIf($[${ISNULL(${ARG4})}]?Set(actual=${CDR(${ARG1})}):Set(actual=${CDR(${ARG1},${ARG4})}))

same => n,GoSub(default,${ARG3},1(${ARG2},${actual}))

same => n,ExecIf($[${GOSUB_RETVAL}=0]?UserEvent(Failure,Field: ${ARG1},Test: ${ARG3},Expected: ${ARG2},Actual: ${actual}))

same => n,Return()

There’s a lot going on here, so let’s break it down one line at a time.

same => n,ExecIf($[${ISNULL(${ARG4})}]?Set(actual=${CDR(${ARG1})}):Set(actual=${CDR(${ARG1},${ARG4})}))

Here we do the actual lookup of the attribute with the CDR function. If the optional fourth argument (ARG4) was passed in, we pass it to the CDR function as an option. Otherwise, we invoke the CDR function with just the required attribute (ARG1). Either way, the variable actual is set to the result.

same => n,GoSub(default,${ARG3},1(${ARG2},${actual}))

Here we’re invoking another subroutine, specified by ARG3. (You can think of ARG3 as a function pointer, if you’re a C kind of person.) We’re passing that subroutine two values: ARG2, which is our expected value, and actual, the result of the invocation of the CDR function.

same => n,ExecIf($[${GOSUB_RETVAL}=0]?UserEvent(Failure,Field: ${ARG1},Test: ${ARG3},Expected: ${ARG2},Actual: ${actual}))

Here we test the result of the GoSub in the previous line. If we get a result of 0, the comparison of our expected value (ARG2) to our actual value (actual) failed, so we raise a UserEvent. Our test will listen over an AMI connection for that event; if it sees it, it will fail the test. If the test finishes without seeing a UserEvent, we’ll pass the test.

Based on our CDR attributes, we are going to want to test three distinct things:

- That the result of the CDR function is exactly equal to some expected value.

- That the result of the CDR function is something, that is, not NULL.

- That a floating point value returned from the CDR function is non-zero in both its integer and fractional parts (this will cover our bug in the duration/billsec attributes).

We can test equality using the following subroutine, which is pretty self-explanatory:

exten => test_equals,1,NoOp()

same => n,ExecIf($["${ARG1}"="${ARG2}"]?Return(1):Return(0))

Likewise, testing that something is not NULL is trivial with the ISNULL function:

exten => test_not_null,1,NoOp()

same => n,ExecIf($[${ISNULL(${ARG2})}]?Return(0):Return(1))

Finally, we want to look at the parts of a floating point number, in our case, a time value, and make sure they are both non-zero. We can do that with judicious use of the CUT function:

exten => test_fp_not_zero,1,NoOp()

same => n,Set(integer=${CUT(ARG2,".",1)})

same => n,Set(fractional=${CUT(ARG2,".",2)})

same => n,ExecIf($["${integer}"="0" || ${ISNULL(${integer})}]?Return(0))

same => n,ExecIf($["${fractional}"="000000" || ${ISNULL(${fractional})}]?Return(0))

same => n,Return(1)
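To reason about these three comparisons outside of Asterisk, they can be mirrored in Python. This is a hypothetical port for illustration only: the function and dictionary names are made up, and it assumes CUT behaves like splitting on ‘.’ and yields an empty string for a missing field.

```python
def equals(expected, actual):
    """Mirror of test_equals: 1 if the strings match, else 0."""
    return 1 if expected == actual else 0


def not_null(expected, actual):
    """Mirror of test_not_null: 1 if actual is non-empty, else 0."""
    return 0 if actual == "" else 1


def fp_not_zero(expected, actual):
    """Mirror of test_fp_not_zero: both parts of 'int.frac' must be non-zero."""
    fields = actual.split(".")  # like CUT(ARG2,".",1) and CUT(ARG2,".",2)
    integer = fields[0]
    fractional = fields[1] if len(fields) > 1 else ""
    if integer in ("", "0") or fractional in ("", "000000"):
        return 0
    return 1


# ARG3 names the comparison extension, much like a function pointer.
COMPARISONS = {"test_equals": equals, "test_not_null": not_null,
               "test_fp_not_zero": fp_not_zero}


def validate_cdr_value(field, expected, test, actual):
    """Return the headers of the Failure UserEvent, or None if the check passes."""
    if COMPARISONS[test](expected, actual) == 0:
        return {"UserEvent": "Failure", "Field": field, "Test": test,
                "Expected": expected, "Actual": actual}
    return None


for value in ("1", "0.001000", "1.001234"):
    print(value, validate_cdr_value("billsec", "", "test_fp_not_zero", value))
```

Here "1" fails for lack of a fractional part, "0.001000" fails on its zero integer part, and only "1.001234" passes.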

Now, we just need to change our code in the h extension to invoke our validate_cdr_value subroutine:

exten => h,1,NoOp()
same => n,GoSub(default,validate_cdr_value,1(lastdata,,test_equals))
same => n,GoSub(default,validate_cdr_value,1(disposition,ANSWERED,test_equals))
same => n,GoSub(default,validate_cdr_value,1(src,8005551234,test_equals))
same => n,GoSub(default,validate_cdr_value,1(start,,test_not_null))
same => n,GoSub(default,validate_cdr_value,1(start,,test_fp_not_zero,u))
same => n,GoSub(default,validate_cdr_value,1(dst,s,test_equals))
same => n,GoSub(default,validate_cdr_value,1(answer,,test_not_null))
same => n,GoSub(default,validate_cdr_value,1(answer,,test_fp_not_zero,u))
same => n,GoSub(default,validate_cdr_value,1(dcontext,default,test_equals))
same => n,GoSub(default,validate_cdr_value,1(end,,test_not_null))
same => n,GoSub(default,validate_cdr_value,1(end,,test_fp_not_zero,u))
same => n,GoSub(default,validate_cdr_value,1(uniqueid,,test_not_null))
same => n,GoSub(default,validate_cdr_value,1(dstchannel,,test_equals))
same => n,GoSub(default,validate_cdr_value,1(duration,,test_not_null))
same => n,GoSub(default,validate_cdr_value,1(duration,,test_fp_not_zero,u))
same => n,GoSub(default,validate_cdr_value,1(userfield,test,test_equals))
same => n,GoSub(default,validate_cdr_value,1(lastapp,Hangup,test_equals))
same => n,GoSub(default,validate_cdr_value,1(billsec,,test_not_null))
same => n,GoSub(default,validate_cdr_value,1(billsec,,test_fp_not_zero,u))
same => n,GoSub(default,validate_cdr_value,1(channel,,test_not_null))
same => n,GoSub(default,validate_cdr_value,1(sequence,1,test_equals))
same => n,GoSub(default,validate_cdr_value,1(foo,bar,test_equals))
same => n,Set(CDR(foo)=not_bar)
same => n,GoSub(default,validate_cdr_value,1(foo,not_bar,test_equals))
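That’s a lot of nearly identical lines. If the list of checks keeps growing, one option is to generate them from a table. The sketch below is a hypothetical helper (CHECKS and gosub_lines are made-up names) that shows only three rows; it reproduces the first, second, and fifth GoSub lines above.

```python
# Hypothetical table of checks: (CDR field, expected value, comparison
# extension, optional CDR function option). Only a few rows are shown.
CHECKS = [
    ("lastdata", "", "test_equals", None),
    ("disposition", "ANSWERED", "test_equals", None),
    ("start", "", "test_fp_not_zero", "u"),
]


def gosub_lines(checks, context="default"):
    """Render one GoSub to validate_cdr_value per check."""
    lines = []
    for field, expected, test, option in checks:
        args = [field, expected, test]
        if option:
            args.append(option)  # becomes ARG4 in validate_cdr_value
        lines.append("same => n,GoSub(%s,validate_cdr_value,1(%s))"
                     % (context, ",".join(args)))
    return lines


print("\n".join(gosub_lines(CHECKS)))
```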

Let’s go ahead and run our test again:

testsuite $ sudo ./runtests.py --test=tests/cdr/cdr_manipulation/func_cdr
Running tests for Asterisk GIT-13-5a75caa (run 1)...
Tests to run: 1, Maximum test inactivity time: -1 sec.
--> Running test 'tests/cdr/cdr_manipulation/func_cdr' ...
Making sure Asterisk isn't running ...
Making sure SIPp isn't running...
Running ['./lib/python/asterisk/test_runner.py', 'tests/cdr/cdr_manipulation/func_cdr'] ...
Test ['./lib/python/asterisk/test_runner.py', 'tests/cdr/cdr_manipulation/func_cdr', 'GIT-13-5a75caa'] failed
<?xml version="1.0" encoding="utf-8"?>
<testsuites>
<testsuite errors="0" failures="1" name="AsteriskTestSuite" tests="1" time="5.58" timestamp="2016-01-04T10:50:06 CST">
<testcase name="tests/cdr/cdr_manipulation/func_cdr" time="5.58">
<failure>Running ['./lib/python/asterisk/test_runner.py', 'tests/cdr/cdr_manipulation/func_cdr'] ...
</failure>
</testcase>
</testsuite>
</testsuites>

Still failing without a cause. On the other hand, if we look at the events spilled out by Asterisk, we should now see two of our UserEvents in there. Open up the full log generated by Asterisk in /tmp/asterisk-testsuite/cdr/cdr_manipulation/func_cdr/run_2/ast1/var/log/asterisk/full.txt, and look for Event: UserEvent. You should see two of them:

[Jan 4 10:47:21] DEBUG[4913] manager.c: Examining AMI event:
Event: UserEvent
Privilege: user,all
Channel: Local/s@default-00000000;2
ChannelState: 6
ChannelStateDesc: Up
CallerIDNum: 8005551234
CallerIDName: Alice
ConnectedLineNum: <unknown>
ConnectedLineName: <unknown>
Language: en
AccountCode:
Context: default
Exten: validate_cdr_value
Priority: 4
Uniqueid: 1451926038.2
Linkedid: 1451926038.0
UserEvent: Failure
Expected:
Field: billsec
Test: test_fp_not_zero
Actual: 1

And:

[Jan 4 10:47:21] DEBUG[4913] manager.c: Examining AMI event:
Event: UserEvent
Privilege: user,all
Channel: Local/s@default-00000000;2
ChannelState: 6
ChannelStateDesc: Up
CallerIDNum: 8005551234
CallerIDName: Alice
ConnectedLineNum: <unknown>
ConnectedLineName: <unknown>
Language: en
AccountCode:
Context: default
Exten: validate_cdr_value
Priority: 4
Uniqueid: 1451926038.2
Linkedid: 1451926038.0
UserEvent: Failure
Expected:
Field: duration
Test: test_fp_not_zero
Actual: 2
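Each of those events is just a list of ‘Key: Value’ headers, which is how AMI frames its messages (one header per line, terminated by a blank line). starpy, the AMI client library installed earlier, handles this for the Test Suite; the sketch below only illustrates the idea, using a trimmed copy of the billsec event. parse_ami_message is a hypothetical helper.

```python
def parse_ami_message(text):
    """Parse one AMI message into a dict of headers.

    Values are split on the first colon only, so a value such as
    'Local/s@default-00000000;2' stays intact. Repeated keys keep the
    last value, which is a simplification.
    """
    headers = {}
    for line in text.splitlines():
        if not line.strip():
            break  # blank line: end of this message
        key, sep, value = line.partition(":")
        if sep:
            headers[key.strip()] = value.strip()
    return headers


EVENT = ("Event: UserEvent\r\n"
         "Channel: Local/s@default-00000000;2\r\n"
         "UserEvent: Failure\r\n"
         "Expected: \r\n"
         "Field: billsec\r\n"
         "Test: test_fp_not_zero\r\n"
         "Actual: 1\r\n"
         "\r\n")

event = parse_ami_message(EVENT)
print(event["Field"], event["Test"], event["Actual"])  # billsec test_fp_not_zero 1
```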

Progress! Now we just need our test to fail if it sees those UserEvents. To do that, we’ll use a pluggable module called the AMIEventModule. This is one of the most versatile and useful pluggable modules: it listens for AMI events and, when it sees one that matches its configuration, performs some action. That action can be anything from passing a test, to failing a test, to executing some custom snippet of Python code. Let’s go back to our test-config.yaml file and tell the Test Suite to load that pluggable module for us:

test-modules:
    test-object:
        config-section: test-object-config
        typename: 'test_case.SimpleTestCase'
    modules:
        -
            config-section: ami-config
            typename: 'ami.AMIEventModule'

Note that we've added a modules section to the test-modules block. It expects a list of pluggable modules to load. We've added an entry to this list for ami.AMIEventModule (ami being the Python package that provides the AMIEventModule), and specified that it will use a configuration section named ami-config.
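The typename value is a dotted path: everything before the last dot names the module to import, and the last component names the class. A minimal sketch of resolving such a string in Python follows. The helper load_typename is hypothetical and is not the Test Suite's own loader, which may differ in its details (for example, in how it then constructs the module with its configuration section). A standard-library class stands in for ami.AMIEventModule so the sketch runs anywhere.

```python
import importlib


def load_typename(typename):
    """Resolve a dotted 'module.ClassName' string to the object it names.

    Hypothetical helper: imports everything before the last dot as a
    module, then fetches the last component as an attribute of it.
    """
    module_name, _, class_name = typename.rpartition('.')
    if not module_name:
        raise ValueError('typename must look like module.ClassName: %r'
                         % typename)
    module = importlib.import_module(module_name)
    return getattr(module, class_name)


# In test-config.yaml this would be 'ami.AMIEventModule'; a stdlib class
# is used here so the example does not need the Test Suite installed.
cls = load_typename('collections.OrderedDict')
print(cls.__name__)  # OrderedDict
```

Resolving classes by name like this is what lets a YAML file pick which Python code runs without the test having any Python of its own.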

Let's add the ami-config section next. This is relatively straightforward: if we see any UserEvents, we want to fail the test. To do that, we'll tell the AMIEventModule to look for Event: UserEvent and that our expected count of such events is 0.

ami-config:
    -
        type: 'headermatch'
        conditions:
            match:
                Event: 'UserEvent'
        count: '0'
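Conceptually, a headermatch entry with a count boils down to three steps: compare each incoming event's headers against the match conditions, tally the hits, and compare the tally with the expected count when the test ends. The Python below is a simplified, hypothetical model of that idea (HeaderMatchCounter is not the real AMIEventModule code). In particular, it compares header values as exact strings, whereas the real module may treat them as patterns.

```python
def headers_match(event, match):
    """True if every header named in `match` has the same value in `event`."""
    return all(event.get(key) == value for key, value in match.items())


class HeaderMatchCounter(object):
    """Simplified, hypothetical model of a 'headermatch' rule with a count."""

    def __init__(self, config):
        self.match = config['conditions']['match']
        self.expected = int(config['count'])  # YAML gave us the string '0'
        self.seen = 0

    def on_event(self, event):
        # Called for every AMI event; only matching events are tallied.
        if headers_match(event, self.match):
            self.seen += 1

    def passed(self):
        # Evaluated once, after the test has finished.
        return self.seen == self.expected


# The same structure as the ami-config entry above.
rule = HeaderMatchCounter({
    'type': 'headermatch',
    'conditions': {'match': {'Event': 'UserEvent'}},
    'count': '0',
})
for evt in ({'Event': 'UserEvent', 'Field': 'billsec'},
            {'Event': 'Newchannel'},
            {'Event': 'UserEvent', 'Field': 'duration'}):
    rule.on_event(evt)
print('%d %s' % (rule.seen, rule.passed()))  # 2 False
```

Fed our two UserEvents plus an unrelated event, the model tallies 2 against an expected 0 and fails.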

Now let’s see what happens when we run our test:

testsuite $ sudo ./runtests.py --test=tests/cdr/cdr_manipulation/func_cdr
Running tests for Asterisk GIT-13-5a75caa (run 1)...
Tests to run: 1, Maximum test inactivity time: -1 sec.
--> Running test 'tests/cdr/cdr_manipulation/func_cdr' ...
Making sure Asterisk isn't running ...
Making sure SIPp isn't running...
Running ['./lib/python/asterisk/test_runner.py', 'tests/cdr/cdr_manipulation/func_cdr'] ...
[Jan 04 11:19:01] WARNING[5455]: ami:192 __check_result: Event occurred 2 times, which is out of the allowable range
[Jan 04 11:19:01] WARNING[5455]: ami:193 __check_result: Event description: {'count': '0', 'type': 'headermatch', 'conditions': {'match': {'Event': 'UserEvent'}}}
Test ['./lib/python/asterisk/test_runner.py', 'tests/cdr/cdr_manipulation/func_cdr', 'GIT-13-5a75caa'] failed
<?xml version="1.0" encoding="utf-8"?>
<testsuites>
<testsuite errors="0" failures="1" name="AsteriskTestSuite" tests="1" time="5.53" timestamp="2016-01-04T11:18:56 CST">
<testcase name="tests/cdr/cdr_manipulation/func_cdr" time="5.53">
<failure>Running ['./lib/python/asterisk/test_runner.py', 'tests/cdr/cdr_manipulation/func_cdr'] ...
[Jan 04 11:19:01] WARNING[5455]: ami:192 __check_result: Event occurred 2 times, which is out of the allowable range
[Jan 04 11:19:01] WARNING[5455]: ami:193 __check_result: Event description: {'count': '0', 'type': 'headermatch', 'conditions': {'match': {'Event': 'UserEvent'}}}
</failure>
</testcase>
</testsuite>
</testsuites>

Alright! Now we have a properly failing test for our bug. Note that the test output tells us that we saw the UserEvent twice, when our configuration allows it zero times.
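The XML at the end of that output has the shape of a JUnit-style report (testsuites, testsuite, testcase, failure), the kind many CI systems consume. If you want to pull the failures out programmatically, a small sketch using Python's standard xml.etree.ElementTree might look like this. The helper failed_cases is hypothetical, and REPORT is a trimmed copy of the report above with the XML declaration and most of the failure text dropped.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the report printed by runtests.py above.
REPORT = """<testsuites>
<testsuite errors="0" failures="1" name="AsteriskTestSuite" tests="1" time="5.53" timestamp="2016-01-04T11:18:56 CST">
<testcase name="tests/cdr/cdr_manipulation/func_cdr" time="5.53">
<failure>Event occurred 2 times, which is out of the allowable range</failure>
</testcase>
</testsuite>
</testsuites>
"""


def failed_cases(xml_text):
    """Return (test name, failure text) pairs for every failed testcase."""
    root = ET.fromstring(xml_text)
    failures = []
    for case in root.iter('testcase'):
        failure = case.find('failure')
        if failure is not None:
            failures.append((case.get('name'), (failure.text or '').strip()))
    return failures


suite = ET.fromstring(REPORT).find('testsuite')
print(suite.get('failures'))  # 1
for name, message in failed_cases(REPORT):
    print(name + ' -> ' + message)
```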

Since we now have our test written, I’m going to go ahead and commit it:

testsuite $ git add tests/cdr/cdr_manipulation/func_cdr
testsuite $ git add tests/cdr/cdr_manipulation/tests.yaml
testsuite $ git commit

You should always write a good commit message for your commits – even if it is a test. The following is the commit message I wrote. While not excessively verbose, it:

- Provides a one-line summary indicating where in the Test Suite we're modifying things.

- Provides a brief description of the test. We aren’t going into a lot of depth here, but someone should have a basic understanding of how the test works from this.

- References the JIRA issue that caused this test to be written.

tests/cdr/cdr_manipulation: Add a test for the CDR dialplan function

This patch adds a test that covers the CDR dialplan function. The test
spawns a Local channel, sets some properties on the CDR via the dialplan
function, then hangs up the channel. Logic in the 'h' extension then
validates that the CDR contains the expected attributes.

ASTERISK-25179
# Please enter the commit message for your changes. Lines starting
# with '#' will be ignored, and an empty message aborts the commit.
# On branch ASTERISK-25179
# Your branch is up-to-date with 'origin/master'.
#
# Changes to be committed:
#	new file:   tests/cdr/cdr_manipulation/func_cdr/configs/ast1/extensions.conf
#	new file:   tests/cdr/cdr_manipulation/func_cdr/test-config.yaml
#	modified:   tests/cdr/cdr_manipulation/tests.yaml
#
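If you want to sanity-check a message against those three points before pushing it, a small script can do the mechanical part. The sketch below is hypothetical: check_commit_message is not part of Asterisk's tooling, and its regular expressions encode only the loose conventions listed above. It ignores git's '#' comment lines, expects a "path: Summary" first line followed by a blank line and a body, and looks for an ASTERISK-NNNNN issue key.

```python
import re


def check_commit_message(message):
    """Return a list of problems; an empty list means every check passed."""
    # Drop git's template comments so e.g. '# On branch ASTERISK-25179'
    # cannot satisfy the issue-reference check by accident.
    lines = [l for l in message.splitlines() if not l.startswith('#')]
    problems = []

    summary = lines[0] if lines else ''
    if not re.match(r'^[\w./-]+: \S', summary):
        problems.append("summary should look like 'path/in/tree: Description'")

    if len(lines) < 3 or lines[1].strip():
        problems.append('summary should be followed by a blank line and a body')

    if not re.search(r'\bASTERISK-\d+\b', '\n'.join(lines)):
        problems.append('no ASTERISK-NNNNN issue reference found')

    return problems


MESSAGE = """tests/cdr/cdr_manipulation: Add a test for the CDR dialplan function

This patch adds a test that covers the CDR dialplan function.

ASTERISK-25179
# On branch ASTERISK-25179
"""

print(check_commit_message(MESSAGE))      # []
print(len(check_commit_message('Fix stuff')))  # 3
```

A script like this can only check form; whether the body actually explains the test is still up to you.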

Once that’s saved, we’re on to the next step: fixing this bug.

Next time: in which we stop worrying and learn to love coding in C.